Info! Please, check our previous work on domain adaptation of virtual and real environments here.

Article

[row][column size=’col-md-4′ ]

[column size=’col-md-8′ ]

Bibtex:

@inproceedings{RosCVPR16,

author={German Ros and Laura Sellart and Joanna Materzynska and David Vazquez and Antonio Lopez},

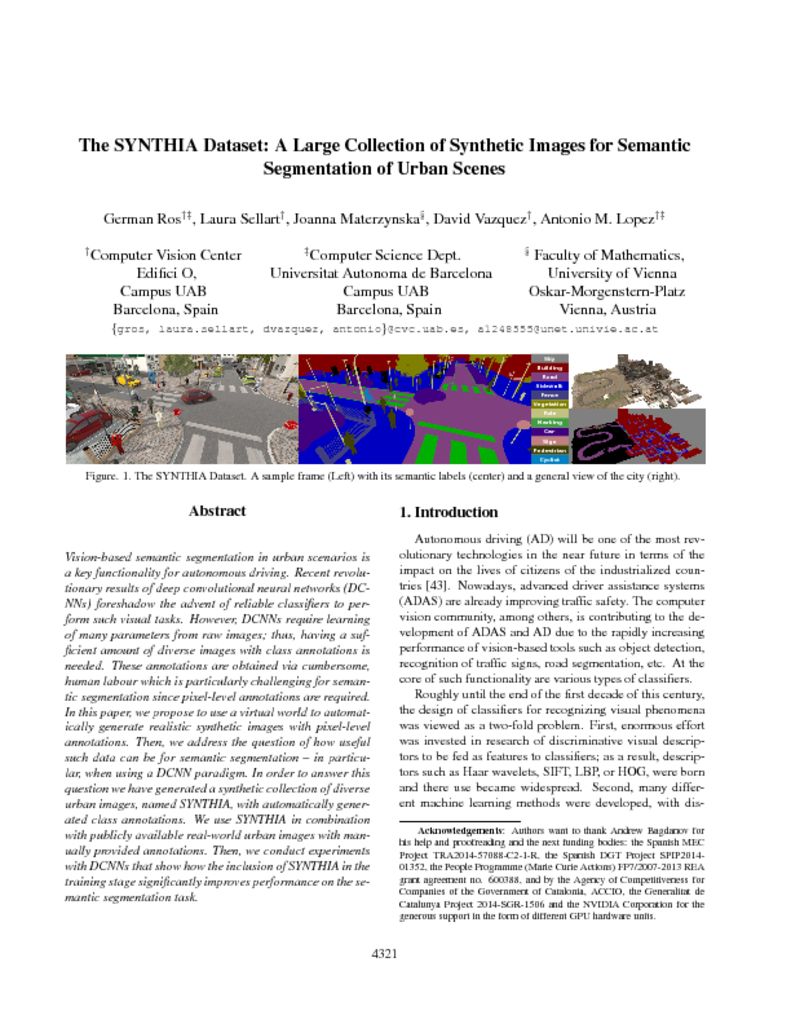

title={ {The SYNTHIA Dataset}: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes},

booktitle=CVPR,

year={2016}

}

[/column][/row]

[row][column size=’col-md-4′ ]

[column size=’col-md-8′ ]

Bibtex:

@inproceedings{HernandezBMVC17,

author={Daniel Hernandez-Juarez and Lukas Schneider and Antonio Espinosa and David Vazquez and Antonio Lopez and Uwe Franke and Marc Pollefeys and Juan Carlos Moure},

title={ Slanted Stixels: Representing San Francisco’s Steepest Streets},

booktitle=BMVC,

year={2017}

}

[/column][/row]