SYNTHIA-AL (ICCV Workshops 2019)

Description:

A dataset intended for active learning. It is a video stream generated at 25 FPS. The classes considered in this dataset are void, sky, building, road, sidewalk, fence, vegetation, pole, car, traffic sign, pedestrian, bicycle, lanemarking, and traffic light. The provided ground truth includes instance segmentation, 2D bounding boxes, 3D bounding boxes, and depth.
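SYNTHIA-AL is a continuous stream at 25 FPS, so a frame's index maps directly to elapsed time, and temporally adjacent frames can be found by index. The following is a minimal sketch. It assumes frames are numbered consecutively from 0 within a sequence (the actual file naming is described in the README). `frame_time` and `neighbors` are hypothetical helpers and are not part of any SYNTHIA tooling.

```python
FPS = 25  # SYNTHIA-AL frame rate stated above

def frame_time(index: int, fps: int = FPS) -> float:
    """Elapsed time in seconds at a frame, assuming 0-based consecutive indices."""
    if index < 0:
        raise ValueError("frame index must be non-negative")
    return index / fps

def neighbors(index: int, radius: int, num_frames: int) -> list[int]:
    """Hypothetical helper: indices within `radius` frames of `index`,
    clipped to [0, num_frames - 1] and excluding `index` itself."""
    lo = max(0, index - radius)
    hi = min(num_frames - 1, index + radius)
    return [i for i in range(lo, hi + 1) if i != index]

print(frame_time(50))         # 2.0 (50 frames at 25 FPS)
print(neighbors(0, 2, 100))   # [1, 2]
```

At 25 FPS, consecutive frames are only 0.04 s apart. Neighboring frames are therefore usually very similar, which is the kind of redundancy a temporal-coherence approach can take advantage of.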

For further details, please consult the following README.

Data packages:

| Name | Package |
|---|---|
| SYNTHIA-AL-Train | SYNTHIA-AL-Train (14041 downloads) |
| SYNTHIA-AL-Test | SYNTHIA-AL-Test (8710 downloads) |
| README | SYNTHIA-AL-README (6199 downloads) |

SYNTHIA-SF (BMVC 2017)

Description:

Video sequence subsets acquired at 5 FPS. There are 6 sequences featuring different scenarios and traffic conditions. They contain 2,224 images with associated ground truth, which were used to evaluate the accuracy of Slanted Stixels in our BMVC 2017 paper. For each sequence we provide the left and right images; ground truth for semantic segmentation, instance segmentation, and depth; and calibration parameters. The semantic classes are Cityscapes-compatible: void, road, sidewalk, building, wall, fence, pole, traffic light, traffic sign, vegetation, terrain, sky, person, rider, car, truck, bus, train, motorcycle, bicycle, road lines, other, and road works.

Related videos: slanted stixels, BMVC 2017 presentation.

Data packages:

| Name | Package |
|---|---|
| SYNTHIA-SF-BMVC2017 | SYNTHIA-SF-BMVC2017 (7386 downloads) |

SYNTHIA-RAND (CVPR16)

Description:

This set contains the original 13,407 images used to train the models and perform the domain adaptation presented in our CVPR’16 paper. The images are generated by randomly perturbing the virtual world. As a result, they have no temporal consistency (this is not a video stream). The images are annotated for 11 basic classes plus void, and they have no instance annotations. The classes are: void, sky, building, road, sidewalk, fence, vegetation, pole, car, sign, pedestrian, and cyclist.

Related videos: depth ground truth, semantic segmentation ground truth, RGB 360°.

Data packages:

| Name | Package |
|---|---|
| SYNTHIA-RAND-CVPR2016 | SYNTHIA-RAND-CVPR2016 (14665 downloads) |

SYNTHIA-RAND-CITYSCAPES (CVPR16)

Description:

A new set of 9,000 random images with labels compatible with the Cityscapes test set. Besides the Cityscapes test classes, we also provide additional classes such as lanemarking. The full list of classes is: void, sky, building, road, sidewalk, fence, vegetation, pole, car, traffic sign, pedestrian, bicycle, motorcycle, parking-slot, road-work, traffic light, terrain, rider, truck, bus, train, wall, and lanemarking. The images are generated by randomly perturbing the virtual world, so they have no temporal consistency (this is not a video stream). This set also includes instance-level ground truth.

Data packages:

| Name | Package |
|---|---|
| SYNTHIA-RAND-CITYSCAPES | SYNTHIA-RAND-CITYSCAPES (27043 downloads) |

SYNTHIA VIDEO SEQUENCES (CVPR16)

Description:

Video subsets acquired at 5 FPS. There are 7 sequences featuring different scenarios and traffic conditions. For convenience, each sequence is divided into sub-sequences. Each sub-sequence shows the same traffic situation under a different weather, illumination, or season condition. The current sub-sequences are: Spring, Summer, Fall, Winter, Rain, Soft-rain, Sunset, Fog, Night, and Dawn. Each sub-sequence contains around 8,000 images (1,000 × 8) with associated ground truth. For each sub-sequence we provide 8 views; ground truth for semantic segmentation, instance segmentation, and depth; global camera poses; and calibration parameters. In this case there are 13 semantic classes: misc, sky, building, road, sidewalk, fence, vegetation, pole, car, sign, pedestrian, cyclist, and lane-marking.
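The per-sub-sequence image count follows from the 1,000 × 8 breakdown. This is a minimal sketch of that arithmetic, which also derives the time span covered at 5 FPS. It assumes exactly 1,000 frames for each of the 8 views, whereas the text above only says "around".

```python
# Arithmetic for one SYNTHIA video sub-sequence (CVPR'16 sequences).
VIEWS = 8               # camera views per sub-sequence, as stated above
FRAMES_PER_VIEW = 1000  # assumption: the page only gives an approximate count
FPS = 5                 # acquisition rate stated above

images_per_subsequence = VIEWS * FRAMES_PER_VIEW   # total images across all views
seconds_per_subsequence = FRAMES_PER_VIEW / FPS    # time covered by each view

print(images_per_subsequence)    # 8000
print(seconds_per_subsequence)   # 200.0
```

Under this assumption, each sub-sequence covers roughly 200 seconds of simulated driving. The real figure depends on the actual frame count of each sub-sequence.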

Data packages:

Citation:

When using or referring to SYNTHIA-CVPR’16 in your research, please cite our CVPR 2016 paper [ pdf ] and check our terms of use.

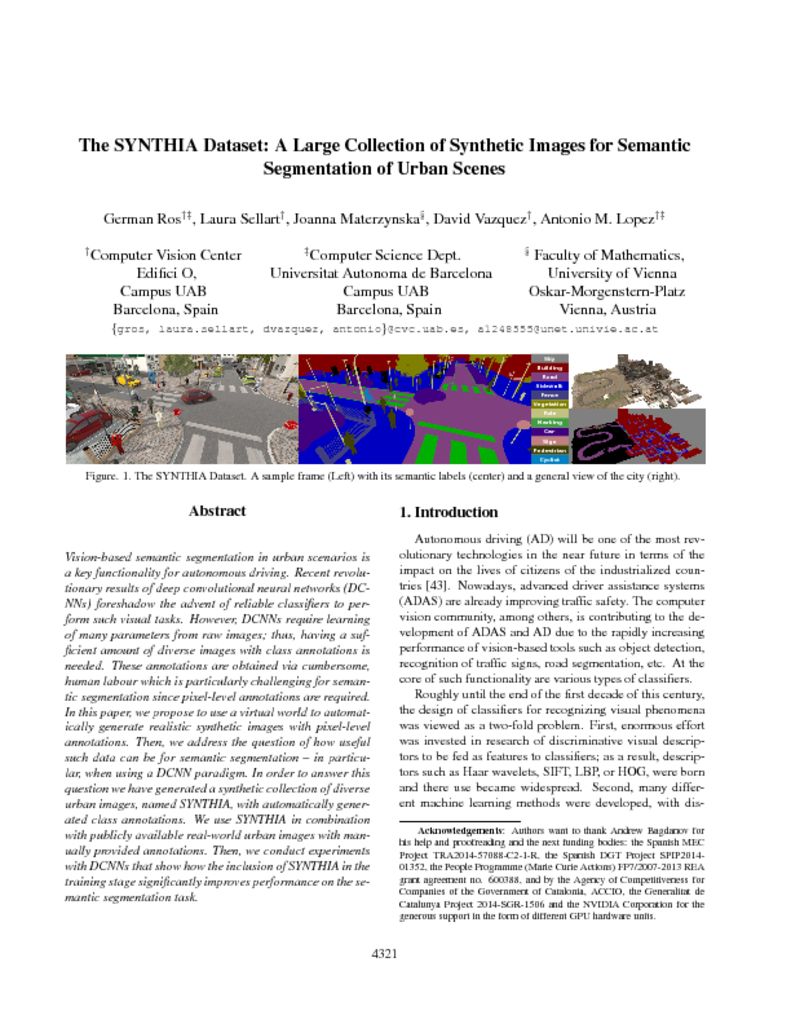

@InProceedings{Ros_2016_CVPR,

author = {Ros, German and Sellart, Laura and Materzynska, Joanna and Vazquez, David and Lopez, Antonio M.},

title = {The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2016}

}

When using or referring to SYNTHIA-SF in your research, please cite our BMVC 2017 paper [ pdf ] and check our terms of use.

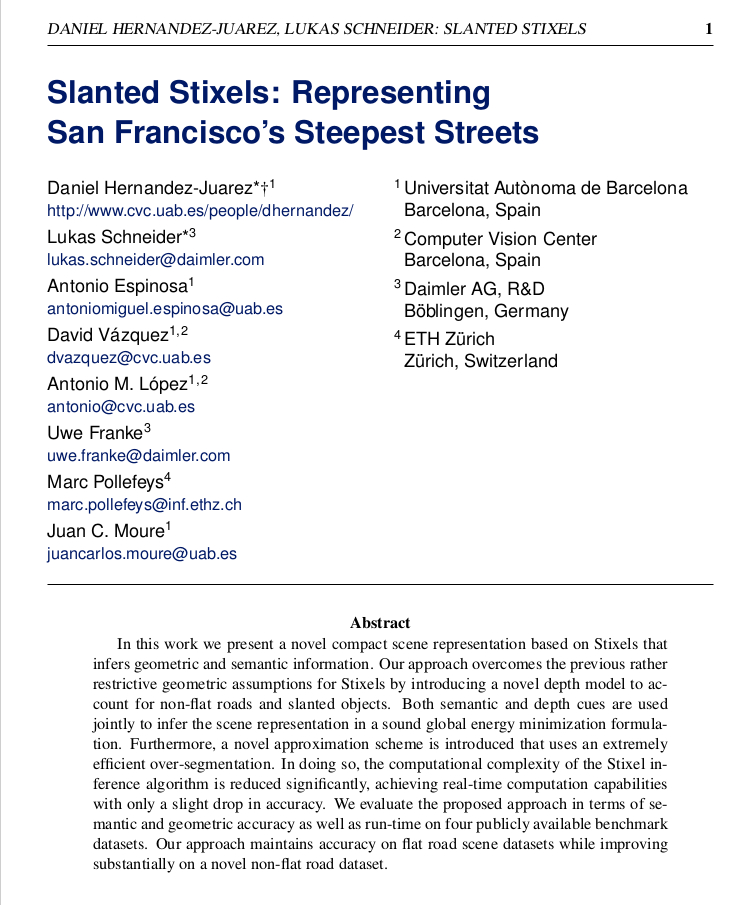

@InProceedings{HernandezBMVC17,

author = {Hernandez-Juarez, Daniel and Schneider, Lukas and Espinosa, Antonio and Vazquez, David and Lopez, Antonio M. and Franke, Uwe and Pollefeys, Marc and Moure, Juan Carlos},

title = {Slanted Stixels: Representing San Francisco’s Steepest Streets},

booktitle = {British Machine Vision Conference (BMVC), 2017},

year = {2017}

}

When using or referring to SYNTHIA-AL in your research, please cite our ICCV Workshops 2019 paper [ pdf ].

@InProceedings{bengarICCVW19,

author = {Zolfaghari Bengar, Javad and Gonzalez-Garcia, Abel and Villalonga, Gabriel and Raducanu, Bogdan and Aghdam, Hamed H and Mozerov, Mikhail and Lopez, Antonio M and van de Weijer, Joost},

title = {Temporal Coherence for Active Learning in Videos},

booktitle = {The IEEE International Conference on Computer Vision, Workshops (ICCV Workshops)},

year = {2019}

}